A glimpse into the world of AI

Is AI a bubble? As we approach the second half of 2024, this has become the trillion-dollar question on everyone's lips. Where are we in this AI-driven tech cycle? In this article, we discuss how AI will fundamentally reshape our work and lives, and assess whether Generative AI has the legs to become a multi-decade technological revolution.

First, we briefly recap key AI development milestones since ChatGPT's launch in November 2022. Second, we predict some future trends for the industry. Finally, we discuss our top AI picks in Asian markets.

Scaling law and Huang's law: model capabilities scale up while hardware costs scale down

To begin with, we have advanced rapidly in our pursuit of AGI (Artificial General Intelligence). In 2019, the best model was GPT-2, which could barely produce a few coherent sentences. Fast forward to 2024, and the current top performer, GPT-4, writes lengthy code snippets and reasons through complex maths problems. The key driver behind this leap in model performance is the scaling law, which states that training models with orders of magnitude more data and computing resources yields significant improvements in their capabilities. This has motivated cash-rich tech giants like Microsoft, Google and Meta to build unprecedentedly large training clusters as they race each other towards the holy grail of AGI. At the core of these data centres are Nvidia's GPUs, which offer best-in-class performance and energy efficiency.
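Scaling laws are usually expressed as power laws: predicted loss falls smoothly as parameters and training data grow, with smaller absolute gains for each further 10x. The sketch below assumes the parametric form popularised by DeepMind's Chinchilla study, L(N, D) = E + A/N^α + B/D^β; the constants are hypothetical placeholders chosen for illustration, not fitted values.

```python
def predicted_loss(params: float, tokens: float,
                   e: float = 1.7, a: float = 400.0, b: float = 400.0,
                   alpha: float = 0.34, beta: float = 0.28) -> float:
    """Chinchilla-style power law: an irreducible loss `e` plus terms that shrink
    as parameters (N) and training tokens (D) grow. Constants are hypothetical."""
    return e + a / params ** alpha + b / tokens ** beta

# Scale parameters and training data together by 10x at each step.
losses = [predicted_loss(1e9 * 10 ** k, 2e10 * 10 ** k) for k in range(4)]
for k, loss in enumerate(losses):
    print(f"N, D x{10 ** k:>4}: predicted loss {loss:.3f}")
```

Each 10x step still lowers the predicted loss, but by less than the step before, which is why every further gain calls for much larger training clusters.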

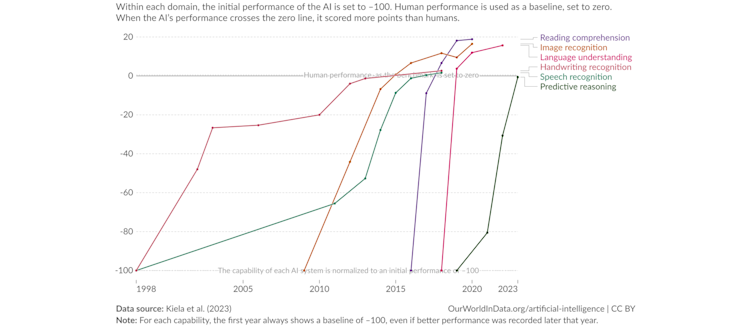

Test scores of AI systems on various capabilities relative to human performance

Source: Our World in Data, 2024

There are two main phases in deploying AI systems. First, training produces a model that can be used for different purposes. Then, inference runs every time the trained model is used. For example, each time we ask ChatGPT a question, it carries out one inference task.
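To make the two phases concrete, here is a toy example with a hypothetical one-variable model: training loops over the data thousands of times to learn two parameters, while each inference is a single cheap calculation using those frozen parameters. Real LLMs follow the same split at a vastly larger scale.

```python
def train(xs, ys, lr=0.01, epochs=2000):
    """Training (done once): fit y = w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def infer(model, x):
    """Inference (done per query): one forward pass with the learned parameters."""
    w, b = model
    return w * x + b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]   # hypothetical data generated from y = 2x + 1
model = train(xs, ys)             # expensive, one-off
for query in [5.0, 10.0]:         # cheap, repeated for every request
    print(query, round(infer(model, query), 2))
```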

On the training side, total training costs for each new generation of model continue to surge. The scaling law implies that models will rapidly grow in parameter count and training data, requiring far more computing resources (GPUs, networking, etc.) to train each next-generation model. For example, training GPT-4, the current state-of-the-art model, requires about 8,000 Nvidia Hopper GPUs, whereas training GPT-5 is expected to require roughly 100,000, an increase of more than 10x (about 12.5x) in GPU count and hence in hardware cost. While current infrastructure is largely limited to Nvidia's solutions, there is more room for hardware cost improvement in the future as more GPU and application-specific integrated circuit (ASIC) vendors compete against Nvidia.
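A quick check of the GPU counts quoted for GPT-4 and GPT-5, assuming (as a simplification) that hardware cost scales linearly with the number of GPUs; real cluster budgets also include networking, power and facilities.

```python
gpt4_gpus = 8_000    # Hopper GPUs cited for GPT-4-class training
gpt5_gpus = 100_000  # Hopper GPUs cited for GPT-5-class training

scale_up = gpt5_gpus / gpt4_gpus
print(f"{scale_up:.1f}x more GPUs")  # 12.5x more GPUs
```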

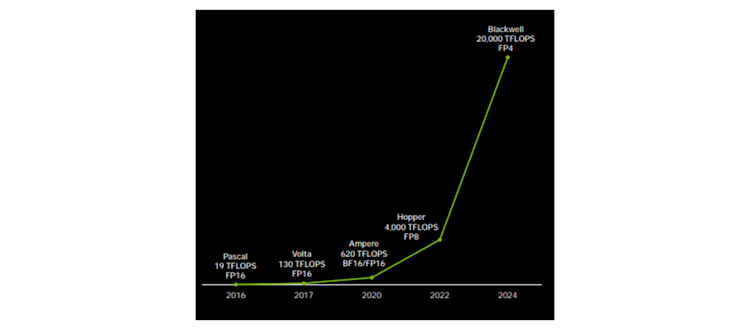

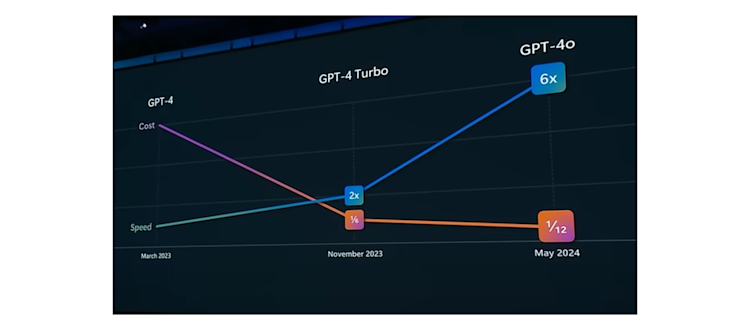

On the inference side, costs are declining quickly. The cost of GPT-4 inference today is just one-twelfth of what it was a year ago, driven by both better hardware performance and software optimisations. Huang's law, named after Nvidia CEO Jensen Huang, posits that the computing power of each GPU chip doubles every year, amounting to a roughly 1,000-fold increase over a decade. Software techniques such as quantisation, which represents model parameters with lower-precision numbers, have become critical drivers and can leverage hardware gains to reduce inference cost further. Thus, while the cost of training the latest model is steadily rising, inference costs will gradually decrease, paving the way for wider LLM adoption.
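A minimal sketch of quantisation, assuming the simplest scheme (symmetric, per-tensor int8): every weight is divided by one shared scale and rounded to an integer in [-127, 127], so it fits in 8 bits instead of 32. Production inference stacks use more refined variants (per-channel scales, 4-bit formats, calibration data), which this sketch does not attempt; the weights are hypothetical.

```python
def quantise_int8(weights: list[float]) -> tuple[list[int], float]:
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantise(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights for computation."""
    return [v * scale for v in q]

weights = [0.42, -1.37, 0.05, 0.91, -0.26]   # hypothetical model weights
q, scale = quantise_int8(weights)
restored = dequantise(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
print(q)  # each value fits in 8 bits, a quarter of the storage of a 32-bit float
print(f"scale={scale:.5f}, max rounding error={max_error:.5f}")
```

The rounding error per weight is at most half the scale, which is why low-precision models can stay close to the original while needing far less memory and bandwidth.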

1000x AI compute in 8 years

Source: Nvidia GTC, 2024

Speed and cost improvements from GPT-4 to GPT-4o

Source: Microsoft Build, 2024
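The compounding behind these figures is easy to check: doubling every year for ten years gives 2^10 = 1,024, roughly 1,000x, while reaching 1,000x in eight years, as in the Nvidia GTC chart, implies growth of about 2.4x per year. The last line restates the one-twelfth GPT-4 inference cost as a percentage reduction.

```python
per_decade = 2 ** 10                 # doubling every year for 10 years
print(per_decade)                    # 1024, i.e. roughly 1000x

annual_8y = 1000 ** (1 / 8)          # yearly factor needed for 1000x in 8 years
print(f"{annual_8y:.2f}x per year")  # 2.37x per year

print(f"{1 - 1 / 12:.0%} lower inference cost")  # 92% lower inference cost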

We are less worried about data sufficiency

As models rapidly grow in size, more people are questioning whether we have sufficient data to train future models. Model developers have already effectively used up the public text data available for training, which has been the single biggest data source. However, there are still many ways to obtain more data to meet the needs of the scaling law. Enterprises keep proprietary data within their data lakes, so model companies like OpenAI are actively negotiating with private firms to make use of it. On top of that, we can use older models (e.g. GPT-3.5) to generate training data for future models, known as synthetic data. This is analogous to how AlphaZero, the AI model that reached a superhuman level at Go, iteratively became better at the game by playing against itself. To mimic humans, who can learn complex concepts from just a handful of examples, neuroscience-inspired techniques like experience replay are also employed, allowing the model to extract more insight from the same set of data. While data sufficiency remains a contentious topic, we are less worried about the problem for at least the next two to three years.
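Experience replay comes from reinforcement learning: past experiences are stored in a buffer and resampled many times during training, so the same data is reused rather than seen once. The sketch below is a generic, minimal replay buffer with hypothetical (state, action, reward) tuples; it is not how any particular lab applies the technique to language models.

```python
import random
from collections import deque
from typing import Optional

class ReplayBuffer:
    """Fixed-size store of past experiences that can be resampled many times."""

    def __init__(self, capacity: int, seed: Optional[int] = None):
        self.buffer = deque(maxlen=capacity)  # oldest items drop out when full
        self.rng = random.Random(seed)

    def add(self, experience) -> None:
        self.buffer.append(experience)

    def sample(self, batch_size: int) -> list:
        # Uniform sampling without replacement within one batch; the same
        # experience can reappear in later batches, i.e. it is reused.
        return self.rng.sample(list(self.buffer), batch_size)

    def __len__(self) -> int:
        return len(self.buffer)

buffer = ReplayBuffer(capacity=1000, seed=0)
for step in range(50):
    # Hypothetical (state, action, reward) tuples standing in for real data.
    buffer.add((step, step % 4, float(step % 2)))

# Ten training batches of 8 drawn from only 50 stored experiences.
batches = [buffer.sample(8) for _ in range(10)]
print(sum(len(b) for b in batches), "samples drawn from", len(buffer), "experiences")
```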

Abundant Gen AI applications reshape our work and life

Generative AI is already reshaping how we work and live. Internet giants use DLRMs (Deep Learning Recommendation Models) to suggest more relevant content, such as ads or social posts, to users. Companies use large language models (LLMs) to assist with tedious tasks like translation or text summarisation. Programmers use coding copilots to accelerate application development, catch bugs and suggest improvements. In the future, better AI performance coupled with lower inference costs will unlock new markets that we have not yet conceived of, much as the proliferation of the Internet led to e-commerce platforms. Generative AI will also be one of the key drivers of economic growth: Goldman Sachs estimates that the technology will add 0.4 percentage points (pp) to GDP growth in the U.S., 0.2-0.4pp in other developed markets, and 0.1-0.2pp in advanced emerging markets over the next decade.

AI develops multimodal understanding and generation

How will AI continue to evolve? The first step will be to give AI more senses. Models are now trained not only on text but also on other forms of data, such as images, audio, and video, to make them smarter. Andrew Parker, a renowned zoologist, has proposed that during the Cambrian explosion, the emergence of vision was crucial for early animals not only to find food and avoid predators, but also to evolve and improve. Similarly, allowing AI to see data beyond plain text should drive further breakthroughs. In his 1952 theory, the Swiss psychologist Jean Piaget suggested that children develop cognitive capabilities through sensory experiences such as sight, hearing, touch, taste, and smell, and through interactions with the physical world. Although today's AI systems still have limited sensory perception, the development of LLM-powered humanoid robots will likely increase and enrich the interactions between models and the physical world, which makes us excited about AI's long-term intelligence potential.

AI develops System-2 step-by-step reasoning capabilities

We have also been exploring how models can think more like humans. For example, researchers use methods such as “chain-of-thought prompting” to make models think step by step when solving a complex problem. This is analogous to System-2 thinking, a slow, deliberate cognitive process, in contrast to System-1 thinking, which is fast, automatic, and intuitive (and closer to how LLMs function today). Researchers have found that chain-of-thought prompting improves model performance on arithmetic, symbolic, and commonsense reasoning.
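Chain-of-thought prompting needs no change to the model itself, only to the prompt: the few-shot exemplar shows its intermediate steps, nudging the model to do the same. The sketch below builds both prompt variants in the style of the original chain-of-thought paper (Wei et al., 2022) and parses the final answer from a completion; no model is called, and the completion shown is a hypothetical example.

```python
import re
from typing import Optional

EXEMPLAR_Q = ("Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
              "Each can has 3 tennis balls. How many tennis balls does he have now?")
EXEMPLAR_STEPS = ("Roger started with 5 balls. 2 cans of 3 tennis balls each is "
                  "6 tennis balls. 5 + 6 = 11. ")
EXEMPLAR_ANSWER = "The answer is 11."

def build_prompt(question: str, chain_of_thought: bool) -> str:
    """Few-shot prompt; with chain_of_thought=True the exemplar shows its steps."""
    worked = (EXEMPLAR_STEPS if chain_of_thought else "") + EXEMPLAR_ANSWER
    return f"Q: {EXEMPLAR_Q}\nA: {worked}\n\nQ: {question}\nA:"

def extract_answer(completion: str) -> Optional[str]:
    """Pull the final number out of a completion ending in 'The answer is N.'"""
    match = re.search(r"The answer is (-?\d+)", completion)
    return match.group(1) if match else None

question = ("The cafeteria had 23 apples. If they used 20 to make lunch and "
            "bought 6 more, how many apples do they have?")
prompt = build_prompt(question, chain_of_thought=True)
print(prompt)

# A hypothetical completion in the step-by-step style the exemplar invites.
completion = ("The cafeteria had 23 apples. They used 20, so 23 - 20 = 3. "
              "They bought 6 more, so 3 + 6 = 9. The answer is 9.")
print(extract_answer(completion))  # 9
```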

AI agents as a promising vehicle for pursuing AGI

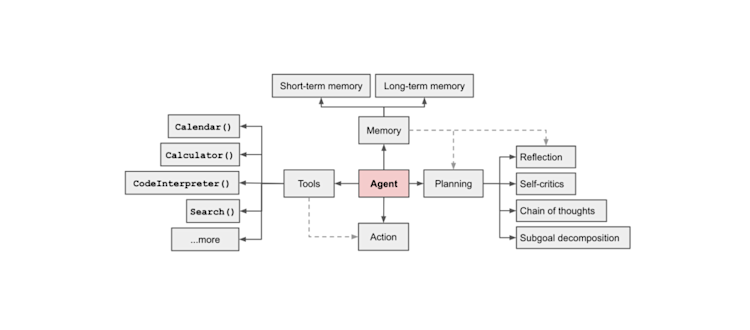

Lower computing costs, advances in multimodal understanding and generation, more efficient model architectures, and enhanced reasoning and planning capabilities will all contribute to the development of advanced AI agents. An AI agent is considered a promising vehicle for pursuing human-level artificial intelligence. OpenAI's Lilian Weng describes an LLM-powered AI agent as a system with an LLM as its brain, equipped with both short-term and long-term memory, capable of multi-step planning, and able to use tools such as API calls. Early proof-of-concept demos, such as Devin, show the potential of an AI agent designed to solve complex coding tasks on its own. Researchers are also developing multi-agent systems, having found that agents specialising in a narrow domain can outperform generalist agents, analogous to the “division of labour” described by Adam Smith. In the future, we envision multi-agent systems and humans co-existing harmoniously.
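Weng's decomposition (an LLM brain, memory, multi-step planning and tool use) maps onto a simple control loop. In the sketch below, the LLM is replaced by a hypothetical rule-based stub (`stub_llm`) so the example runs without any model or API, and `add_tool` is a hypothetical stand-in for an external API call. The names and structure are illustrative only, not Weng's or any agent framework's actual interface.

```python
import re
from dataclasses import dataclass, field
from typing import Callable

Tool = Callable[[str], str]                              # string in, observation out
Brain = Callable[[str, list[str]], tuple[str, str, str]]  # -> (kind, tool_name, argument)

def add_tool(arg: str) -> str:
    """Hypothetical tool standing in for an API call: sums comma-separated integers."""
    return str(sum(int(x) for x in arg.split(",")))

def stub_llm(goal: str, scratchpad: list[str]) -> tuple[str, str, str]:
    """Hypothetical stand-in for an LLM planner (no real model is called).
    First step: plan a tool call. Next step: finish with the latest observation."""
    if not scratchpad:
        numbers = ",".join(re.findall(r"\d+", goal))
        return ("tool", "add", numbers)
    return ("finish", "", scratchpad[-1].split(" = ")[-1])

@dataclass
class Agent:
    brain: Brain
    tools: dict[str, Tool]
    long_term_memory: list[str] = field(default_factory=list)  # persists across tasks

    def run(self, goal: str, max_steps: int = 5) -> str:
        scratchpad: list[str] = []  # short-term memory for this task only
        for _ in range(max_steps):
            kind, name, arg = self.brain(goal, scratchpad)
            if kind == "finish":
                self.long_term_memory.append(f"{goal} -> {arg}")
                return arg
            observation = self.tools[name](arg)  # act through a tool
            scratchpad.append(f"{name}({arg}) = {observation}")
        return "stopped: step limit reached"

agent = Agent(brain=stub_llm, tools={"add": add_tool})
answer = agent.run("What is 2 plus 3?")
print(answer)                  # 5
print(agent.long_term_memory)  # ['What is 2 plus 3? -> 5']
```

Swapping `stub_llm` for a real model call that returns the same (kind, tool, argument) triple is the step this sketch deliberately leaves out.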

Overview of an LLM-powered autonomous agent system

Source: Lilian Weng, 2023

AI drives transformational change in robotics

AI is also driving transformational change in robotics, which could unlock vast productivity gains and create a seismic shift in the global economy. Thanks to LLMs, researchers have given robots capabilities such as vision, planning, and the ability to interact with humans. For instance, a team at Google built a system (SayCan) in which the robot acts by reasoning about the current state of its environment and its own abilities. When given the prompt “I spilled my coke, can you help?”, the robot assesses which available tools in the environment might be useful and which actions it can actually take. It can then pick up a sponge to help, instead of something irrelevant.
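The SayCan paper (“Do As I Can, Not As I Say”, Ahn et al., 2022) describes choosing the next skill by multiplying two scores: how useful the language model thinks a skill is for the instruction, and how likely the robot is to succeed at it from its current state (an affordance estimate). The sketch below shows only that selection step, with hypothetical skill names and scores.

```python
def select_skill(llm_scores: dict[str, float],
                 affordance_scores: dict[str, float]) -> tuple[str, dict[str, float]]:
    """Pick the skill with the highest product of 'is it useful?' (language model)
    and 'can I do it right now?' (affordance estimate)."""
    combined = {skill: llm_scores[skill] * affordance_scores.get(skill, 0.0)
                for skill in llm_scores}
    return max(combined, key=combined.get), combined

# Hypothetical scores for the instruction "I spilled my coke, can you help?"
llm_scores = {"pick up the sponge": 0.50, "find a sponge": 0.30,
              "go to the table": 0.15, "find an apple": 0.05}
affordance_scores = {"pick up the sponge": 0.10,  # no sponge within reach yet
                     "find a sponge": 0.90,
                     "go to the table": 0.80,
                     "find an apple": 0.90}

best, combined = select_skill(llm_scores, affordance_scores)
print(best)  # find a sponge
```

The language model alone would pick “pick up the sponge”, but the low affordance score for that skill steers the robot to find a sponge first.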

A heterogeneous world where large models and small models co-exist

Models need to be commercialised and built into products. Developers are creating specialised small language models tailored to specific tasks or workflows. For example, Apple uses a roughly 3-billion-parameter on-device model specialised for tasks like summarisation, proofreading, and drafting mail replies. Techniques for turning large models into smaller ones, such as distillation and pruning, have advanced greatly. We see the future as a heterogeneous world in which researchers continue to develop more capable cloud-based models with more emergent behaviours, while smaller edge-based models with better security and lower latency are deployed on our PCs and mobile devices.
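Distillation trains a small “student” model to match the output distribution of a large “teacher”. A minimal sketch of the classic soft-target loss from Hinton et al. (2015) follows: both sets of logits are softened with a temperature T, compared with KL divergence, and scaled by T². The logits are hypothetical, and real training would combine this term with an ordinary label loss and backpropagate through the student.

```python
import math

def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    scaled = [z / temperature for z in logits]
    m = max(scaled)                          # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(teacher_logits: list[float], student_logits: list[float],
                      temperature: float = 2.0) -> float:
    """KL(teacher || student) on temperature-softened distributions, scaled by T**2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl

teacher = [4.0, 1.5, 0.2]          # hypothetical logits from a large model
close_student = [3.8, 1.6, 0.1]    # student that mimics the teacher well
far_student = [0.1, 3.0, 2.0]      # student that does not
print(distillation_loss(teacher, close_student))  # small
print(distillation_loss(teacher, far_student))    # much larger
```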

Taiwan, Korea and Japan are at the heart of the supply chain

How can you participate in this AI journey? Many investors might think they can only invest in US companies to benefit from it. However, many Asian companies are enabling the great journey of AI development, with Taiwan, Korea and Japan at the heart of the supply chain. Let's take a quick look at several examples.

TSMC (2330-TW) holds a de facto monopoly in manufacturing Nvidia's GPU chips, the custom silicon that US cloud providers are using, and networking chips. It also supports the broader AI theme by manufacturing chips for mobile phones and PCs, where AI applications are increasingly appearing.

SK Hynix (000660-KR) is the world's largest provider of high-bandwidth memory (HBM) chips. These specialised memory chips deliver the high bandwidth required to train and run today's AI models.
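Why bandwidth matters can be shown with two back-of-the-envelope formulas. Peak bandwidth of one memory stack is interface width times per-pin data rate; and when a model generates text one token at a time, every token requires reading all the weights from memory, so bandwidth caps the token rate. The figures below are assumptions for illustration (a 1024-bit interface at 6.4 Gbit/s per pin, in line with the HBM3 generation, and a hypothetical six-stack accelerator); the token estimate ignores the KV cache, batching and on-chip caching.

```python
def stack_bandwidth_gb_s(bus_width_bits: int, pin_rate_gbit_s: float) -> float:
    """Peak bandwidth of one stack in GB/s: width x per-pin rate / 8 bits per byte."""
    return bus_width_bits * pin_rate_gbit_s / 8

def max_tokens_per_s(bandwidth_gb_s: float, params_billion: float,
                     bytes_per_param: float) -> float:
    """Rough upper bound for single-stream decoding: each token streams all weights once."""
    model_gb = params_billion * bytes_per_param
    return bandwidth_gb_s / model_gb

per_stack = stack_bandwidth_gb_s(1024, 6.4)   # assumed HBM3-class figures
accelerator = 6 * per_stack                   # hypothetical six-stack accelerator
print(f"{per_stack:.1f} GB/s per stack")      # 819.2 GB/s per stack
print(f"{accelerator / 1000:.1f} TB/s total") # 4.9 TB/s total
print(f"~{max_tokens_per_s(accelerator, 70, 2):.0f} tokens/s for a 70B model in 16-bit")  # ~35
```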

Disco (6146-JP) is the world leader in grinders and dicers used in semiconductor chip manufacturing. Disco's machines play a critical role in producing these highly sophisticated HBM chips, which require grinding the chips to ultra-thin levels. Only machines that deliver very high precision and flatness can do this. With its 50 years of history in precision semiconductor equipment, Disco is a dominant force in enabling HBM chip manufacturing.

Conclusion: We are optimistic about Generative AI

Many researchers believe digital neural networks are conceptually similar to biological brains. Model parameters are analogous to the brain's synapses, the connections between neurons. A human brain has roughly 100 trillion synapses, and, the argument goes, the greater the number of synapses, the higher the resulting intelligence. Today, GPT-4 is believed to have 1.8 trillion parameters, and scaling towards 100 trillion is the direction in which we expect the limits of the scaling law to be tested.
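Taking the two figures above at face value (the synapse count is approximate and the GPT-4 parameter count is a reported, unconfirmed estimate), the remaining gap is a little under two orders of magnitude:

```python
import math

synapses = 100e12      # approximate synapse count of a human brain
gpt4_params = 1.8e12   # reported (unconfirmed) GPT-4 parameter count

gap = synapses / gpt4_params
print(f"{gap:.0f}x larger")                          # 56x larger
print(f"{math.log10(gap):.2f} orders of magnitude")  # 1.74 orders of magnitude
```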

To conclude, while scepticism persists around the sustainability of the scaling law and the commercialisation timeline of Generative AI, we remain optimistic about the overall trend. Hardware costs will continue to decline under Huang's law, enabling wider adoption of generative AI. On top of that, software breakthroughs, such as training on more data modalities or letting models self-train, will unlock more capabilities. The advancement of physical AI might be the last missing piece in realising AGI, as robots start to integrate seamlessly into our society to solve more complicated tasks.

Disclaimer

Degroof Petercam Asset Management SA/NV (DPAM) | rue Guimard 18, 1040 Brussels, Belgium | RPM/RPR Brussels | TVA BE 0886 223 276

Marketing Communication. Investing incurs risks.

The views and opinions contained herein are those of the individuals to whom they are attributed and may not necessarily represent views expressed or reflected in other DPAM communications, strategies or funds.

The provided information herein must be considered as having a general nature and does not, under any circumstances, intend to be tailored to your personal situation. Its content does not represent investment advice, nor does it constitute an offer, solicitation, recommendation or invitation to buy, sell, subscribe to or execute any other transaction with financial instruments. Neither does this document constitute independent or objective investment research or financial analysis or other form of general recommendation on transaction in financial instruments as referred to under Article 2, 2°, 5 of the law of 25 October 2016 relating to the access to the provision of investment services and the status and supervision of portfolio management companies and investment advisors. The information herein should thus not be considered as independent or objective investment research.

Past performance does not guarantee future results. All opinions and financial estimates are a reflection of the situation at issuance and are subject to amendments without notice. Changed market circumstances may render the opinions and statements incorrect.