Equity

Outlook 2026: ten reasons we are not in an AI bubble


Everyone is asking the same question: is AI the new dot-com bubble? Stock prices are climbing, headlines are growing louder, and the hype is hard to miss. But under the surface, this cycle does not necessarily behave like a bubble that’s ready to burst. It looks much more like the early days of smartphones or cloud computing: large-scale adoption, real productivity gains, and hard physical bottlenecks that prevent oversupply.

Here’s why we think the AI cycle still has years to run.

1. Adoption first, monetisation later (but the ROI is already obvious)

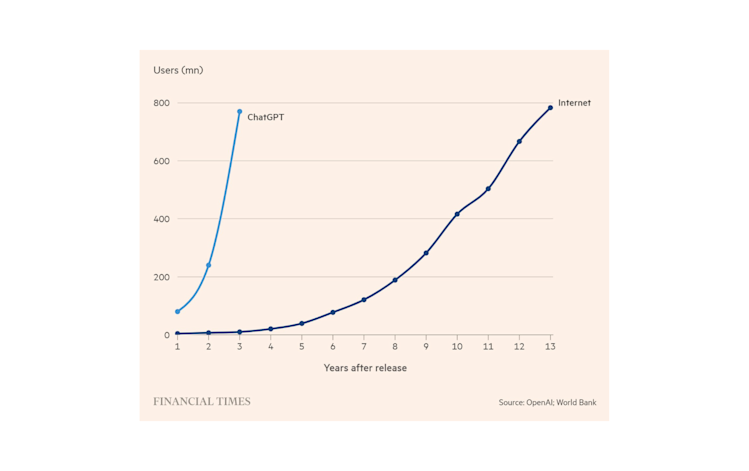

Internet platforms tend to follow the same script: onboard users first, then monetise at scale.

Facebook is the classic case. At its IPO in 2012, it had around a billion users, traded at about 100x expected earnings and was widely dismissed as a bubble. It had barely monetised mobile, and average revenue per user (ARPU) in developed markets was roughly 8 dollars per year. Twelve years on, ARPU is close to 200 dollars and the share price has multiplied roughly sixteen-fold. Monetisation lagged adoption by years, but it did arrive.
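To put those Facebook figures in annual terms, the short sketch below converts them into compound annual growth rates. It uses only the rounded numbers quoted above (ARPU of about 8 rising to about 200 dollars, a roughly sixteen-fold share price, twelve years); the `cagr` helper is our own illustrative function, not a data source.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate that turns `start` into `end` over `years`."""
    return (end / start) ** (1 / years) - 1


# Rounded figures from the text: ARPU ~8 -> ~200 dollars, share price ~16x, ~12 years.
arpu_cagr = cagr(8, 200, 12)
share_price_cagr = cagr(1, 16, 12)

print(f"ARPU growth: {arpu_cagr:.1%} per year")                # ARPU growth: 30.8% per year
print(f"Share price growth: {share_price_cagr:.1%} per year")  # Share price growth: 26.0% per year
```

On these rough inputs, revenue per user compounded faster than the share price over the period.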

ChatGPT feels eerily similar. It is rapidly approaching 1 billion weekly users and sits at the centre of people’s professional and personal lives. Users trust it with real tasks such as drafting, coding, analysis, shopping decisions and even life choices. For many professionals, 20 dollars a month already feels cheap relative to the time saved. Free users, in turn, would likely accept targeted advertising in exchange for that value.

ChatGPT’s growth versus the internet

Source: The Financial Times, 2025

If a mature AI assistant ecosystem can earn around 10 dollars a month from each engaged user, one billion of those users implies revenue potential of roughly 120 billion dollars a year. This is standard platform economics.
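The revenue arithmetic is simple enough to write down explicitly. A minimal sketch, assuming a flat monthly revenue per engaged user: the 10-dollar figure and the one-billion user base are the text’s illustrative assumptions, while the 5- and 20-dollar sensitivity cases are our own hypothetical inputs.

```python
def annual_revenue_potential(engaged_users: int, monthly_revenue_per_user: float) -> float:
    """Annual revenue if every engaged user generates the same monthly revenue."""
    return engaged_users * monthly_revenue_per_user * 12


users = 1_000_000_000  # one billion engaged users, as in the text
for monthly in (5.0, 10.0, 20.0):  # 10 dollars is the text's case; 5 and 20 are hypothetical
    revenue = annual_revenue_potential(users, monthly)
    print(f"{monthly:>4.0f} dollars/month -> {revenue / 1e9:.0f} billion dollars/year")
```

The 10-dollar case reproduces the roughly 120 billion dollars quoted above; because revenue scales linearly with both inputs, halving or doubling the monthly figure halves or doubles the result.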

However, building a global platform is expensive. Netflix, Spotify and Uber all burned cash for years before scaling. AI platforms are in that same ‘build and learn’ phase. You need to onboard users, improve the experience, capture data, strengthen the product and out-innovate deep-pocketed competitors (hello, Google).

Meanwhile, the user-side return on investment is already real and measurable. As models get closer to human expert levels (see below), AI has quickly moved from ‘nice demo’ to ‘critical infrastructure’. The impact is already visible across the real economy: in healthcare, models read scans, draft reports and triage cases; in knowledge work, research, coding, marketing and legal teams offload hours of routine work to AI; in industry and robotics, vision models inspect products and guide machines; and in customer service, AI agents handle the bulk of simple queries before a human ever steps in. AI cuts costs, raises throughput and compresses time-to-decision.

2. Returns track earnings

In a real valuation bubble, prices detach from reality. Late-1990s internet stocks were characterised by soaring share prices with no earnings behind them. At the end of 1999, the MSCI World IT index traded at more than 50 times earnings.

Global IT sector at dot-com (indexed to 1997)

Source: Bloomberg, MSCI, 2025

This time, the picture is different. Across the IT sector, price performance has broadly followed earnings growth: share prices have risen sharply, but profits have generally kept pace. There have been pockets of exuberance, of course, but at the index level this is not a case of purely narrative-driven returns.

Global IT sector today (indexed to 2022)

Source: Bloomberg, MSCI, 2025

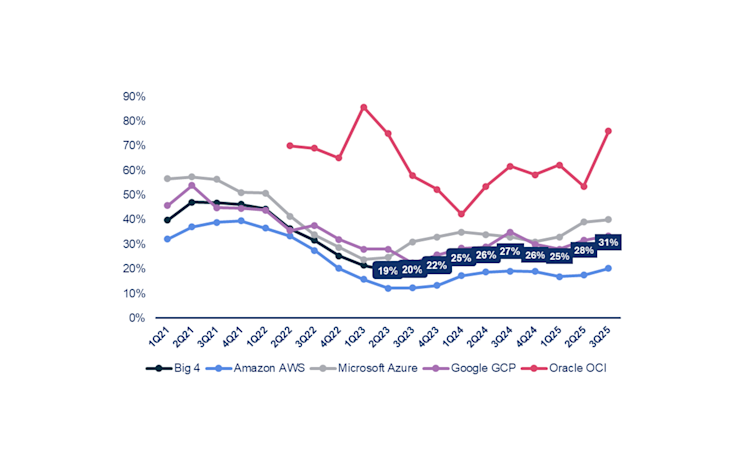

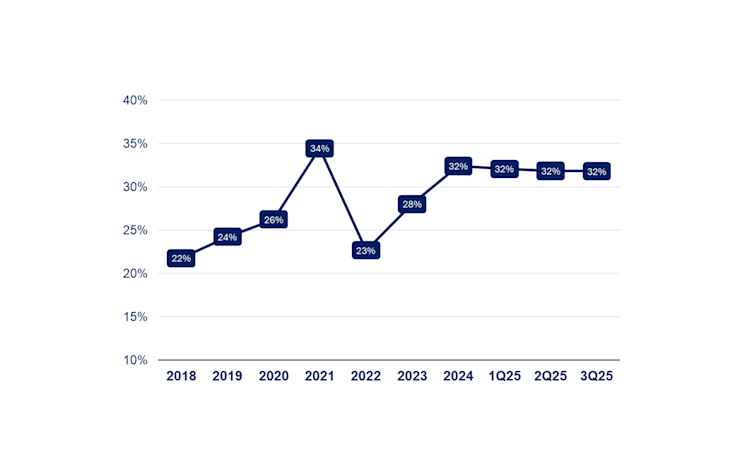

In the long run, stock prices follow earnings per share. So far, AI-exposed technology has behaved like a strong growth story rather than a classic mania. Microsoft Azure, Amazon Web Services, Google Cloud and Oracle Cloud Infrastructure have all accelerated, and new capacity is sold as soon as it comes online. On a combined revenue base of around USD 300 billion, these cloud businesses have accelerated to 31% topline growth, while profitability has remained high.

Cloud revenue growth YoY

Source: Bloomberg, 2025

Hyperscaler return on equity (Alphabet, Amazon, Meta, Microsoft)

Source: Bloomberg, 2025

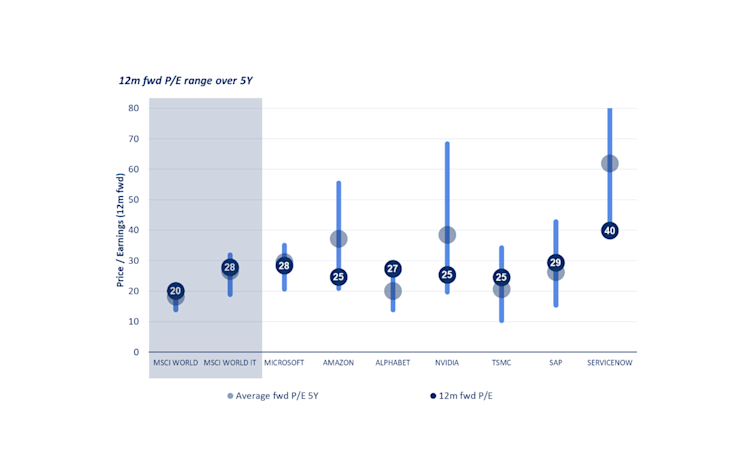

3. Valuation comes at a (reasonably priced) premium

As a consequence, valuations are still reasonable. Yes, the big AI leaders trade at a substantial premium to the broader market. But that premium can be explained by their ability to combine faster growth, higher margins, strong competitive moats and very low leverage. Paying a premium for such profiles seems only logical.

12-month forward P/E range over 5 years

Source: Bloomberg, 2025

Crucially, most of these companies still trade around their own five-year average valuation multiples. If they grow earnings materially faster than the market over the next three to four years (which we believe they will), their earnings will have caught up with today’s share prices, and we expect them to be trading on roughly a market multiple by then, despite stronger future growth.
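The mechanism behind that expectation can be sketched with a little arithmetic. Assuming, purely for illustration, that a company’s share price moves exactly in line with the market, its P/E relative to the market shrinks each year by the ratio of market EPS growth to company EPS growth. The inputs below (a 50% premium, 20% versus 8% annual EPS growth, four years) are hypothetical and not taken from the chart; `relative_pe_after` is our own helper.

```python
def relative_pe_after(premium_today: float, company_eps_growth: float,
                      market_eps_growth: float, years: int) -> float:
    """Company P/E divided by market P/E after `years`.

    Assumes both share prices rise by the same factor, so only the
    earnings denominators diverge: each year the relative multiple is
    scaled by (1 + market growth) / (1 + company growth).
    """
    return premium_today * ((1 + market_eps_growth) / (1 + company_eps_growth)) ** years


# Hypothetical inputs: 1.5x the market multiple today, 20% vs 8% annual EPS growth.
for year in range(5):
    print(year, round(relative_pe_after(1.5, 0.20, 0.08, year), 2))
```

On these inputs the premium is essentially gone by year four, even though the company is still growing faster than the market at that point.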

The semiconductor industry shows how much room remains. Even including Nvidia, it represents only about half a percent of global GDP. For a technology at the heart of the AI build-out, that leaves significant room for above-trend growth.

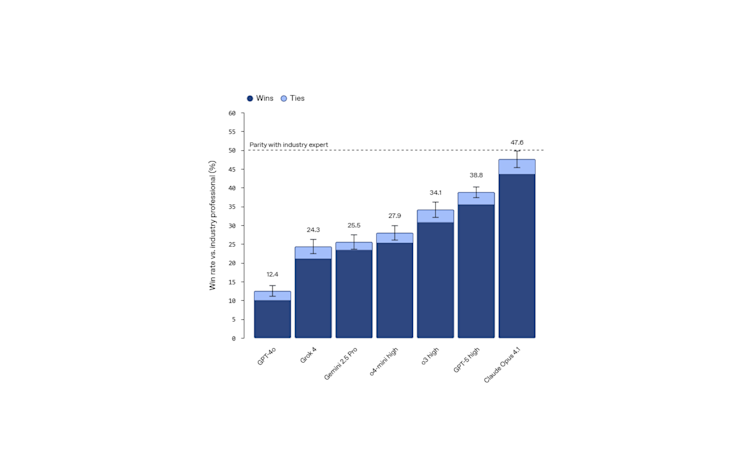

4. Models are fast approaching human expert levels

We are not at a steady state in model quality. The new generation of ‘reasoning models’ is materially narrowing the gap with human experts on many tasks.

In simple terms, these models do not just spit out a single answer. They ‘think’ through a problem multiple times, revisiting and refining their own initial response. That process cuts hallucinations, improves reliability and opens the door to more demanding use cases.

GDPval win rate: performance on economically valuable tasks

Source: OpenAI GDPval paper, 2025

The business implication is straightforward: as capability rises, more workflows become viable to automate or augment. That means more usage, deeper integration into organisations and, ultimately, more revenue. The chart shows that in only a year, AI models have closed much of the gap with human experts.

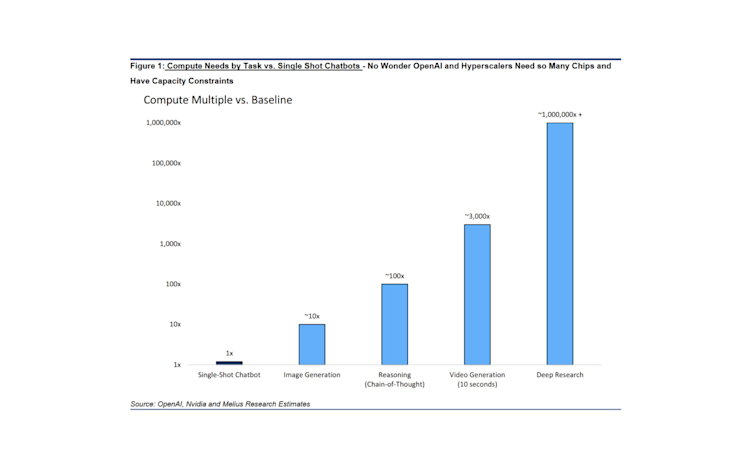

5. More compute for advanced AI

Importantly, these reasoning models require far more computing power.

Each new frontier in AI follows the same pattern. Reasoning, autonomous agents, image and video generation, and deep research over large text corpora all demand an order of magnitude more compute than the generation before them.

Compute needs by task versus single-shot challenges

Source: OpenAI, Nvidia and Melius Research estimates, 2025

Take deep research: a broad range of companies now feed large collections of reports, filings and notes into AI systems and request structured, comparative insight on specific topics. Work that once required weeks of manual effort can now be produced in about twenty minutes.

A year ago, a single deep research project might have cost around 200 dollars in compute. As the cost per token falls, those same projects become cheaper and, crucially, we can afford to run many more of them. That’s the core point: as the cost of AI drops, the volume of AI usage skyrockets.

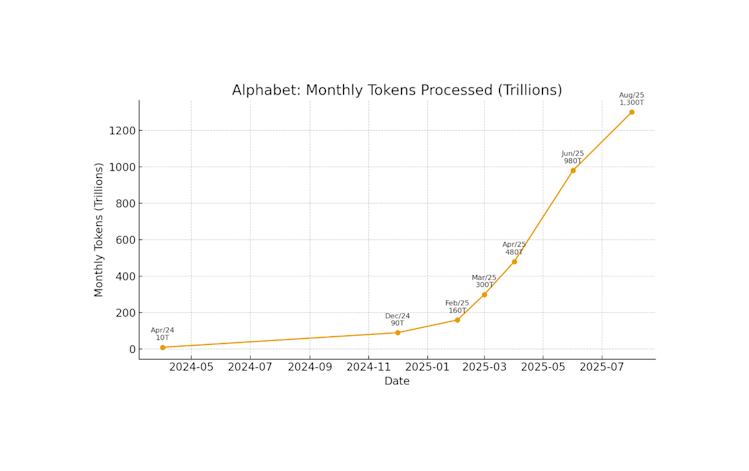

6. Consumption doubles every three months

The best measure of AI demand is the number of tokens processed. Tokens are the raw units of work handled by models and, as established above, their volume is skyrocketing.

Between December 2024 and August 2025, Google reported roughly a fourteen-fold increase in AI token usage in just eight months. That means usage more than doubled every three months: a fourteen-fold rise over eight months implies a doubling roughly every two months. According to a CNBC article, Google expects to double its AI serving capacity about every six months just to keep up.
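The doubling time implied by a growth multiple is the period multiplied by ln 2 and divided by the natural log of the multiple. The sketch below applies that to the fourteen-fold, eight-month figure and converts three- and six-month doubling rates into annual multiples; the helper names are our own.

```python
import math


def doubling_time(growth_factor: float, period: float) -> float:
    """Time needed to double, given total growth `growth_factor` over `period`."""
    return period * math.log(2) / math.log(growth_factor)


def growth_over(doubling_period: float, horizon: float) -> float:
    """Total growth multiple over `horizon` at a constant doubling period (same units)."""
    return 2 ** (horizon / doubling_period)


print(f"{doubling_time(14, 8):.1f} months")  # 2.1 months
print(growth_over(3, 12))  # 16.0: doubling every three months is a sixteen-fold rise per year
print(growth_over(6, 12))  # 4.0: doubling every six months is a four-fold rise per year
```

On these figures, token usage compounds considerably faster than a six-month capacity doubling would deliver.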

Alphabet: monthly tokens processed (trillions)

Source: ChatGPT deep research on public communications, 2025

This is what exponential adoption looks like: few user bottlenecks, strong incentives to experiment, and quick, tangible productivity gains. When demand grows this fast and supply struggles to keep pace, you get a multi-year investment supercycle.

7. Supply is the real constraint

A bubble usually comes with one obvious feature: critical levels of oversupply. Too many fibre networks, too many speculative data centres, too many factories. During the dot-com bubble, 97% of the fibre laid was never used and stayed dark.

AI today has the opposite problem. GPUs are running at 100% utilisation, even six-year-old A100s. Moreover, bottlenecks are limiting how fast supply can expand, making a boom-bust cycle highly unlikely.

Power is the most pressing bottleneck. The US has under-invested in generation capacity for decades. Now, hyperscale data centres need vast, continuous power. Everything is being reconsidered: solar with battery storage, nuclear, even a gas turbine renaissance. Some suppliers already claim to be effectively sold out for years.

On the chip side, TSMC, a company normally known for its measured language, has called AI demand ‘insane’ and says customers want roughly three times more advanced-node capacity than is available. Memory and high-bandwidth components are similarly tight.

As the dominant player in advanced manufacturing, TSMC can afford to take a measured approach to expansion. It wants proof of durable demand before committing to another large capex wave and likely prefers to stay on the safe side.

That cautious approach, combined with the physical bottlenecks in power and infrastructure, acts as a natural brake on over-investment and makes a violent oversupply bust less likely. Cooling, land and grid connections are additional constraints, requiring careful multi-year planning.

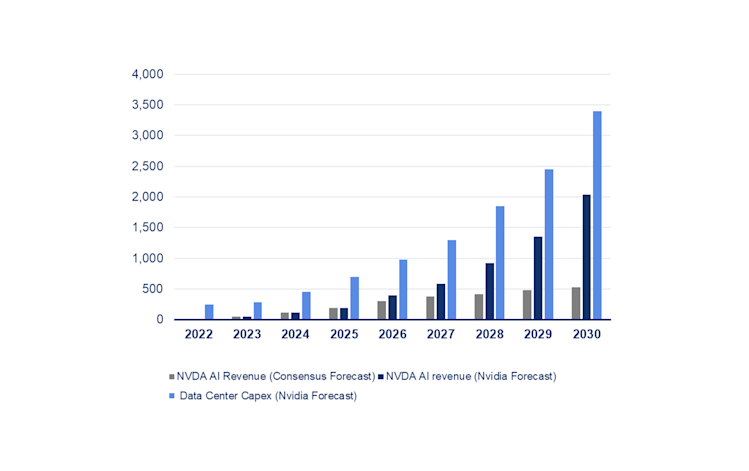

8. 40% annual growth potential for data centre capex

Nvidia’s own expectations for the AI build-out are striking. It sees global data centre capex growing at roughly 40% per year through 2030.

Nvidia AI revenue versus data centre capex

Source: Nvidia, Bloomberg, 2025

By contrast, market expectations for AI revenue growth often sit closer to twelve percent annually over the same period. Compounded through 2030, that is a large gap. If Nvidia is even roughly correct, consensus is underestimating the size and duration of the AI capex cycle.
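Compounding makes the difference between the two growth rates explicit. A minimal sketch, assuming a 2025 base year (five years of growth to 2030) and a constant annual rate; both assumptions are ours, and only the 40% and 12% rates come from the text.

```python
def cumulative_growth(annual_rate: float, years: int) -> float:
    """Total growth multiple after `years` at a constant annual rate."""
    return (1 + annual_rate) ** years


YEARS = 5  # assumption: 2025 base year, compounding through 2030
nvidia_view = cumulative_growth(0.40, YEARS)
consensus_view = cumulative_growth(0.12, YEARS)

print(f"Nvidia view: {nvidia_view:.2f}x")        # Nvidia view: 5.38x
print(f"Consensus view: {consensus_view:.2f}x")  # Consensus view: 1.76x
print(f"Ratio by 2030: {nvidia_view / consensus_view:.1f}x")  # Ratio by 2030: 3.1x
```

On these assumptions, the 2030 level implied by Nvidia’s path would be roughly three times the level implied by the twelve percent path.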

Even with that backdrop, Nvidia trades at about 25 times its estimated 2026 earnings. That is a premium valuation, but nowhere near the extremes seen in past bubbles. You could argue that even the lower consensus growth path is not fully reflected in current pricing, let alone Nvidia’s more ambitious outlook.

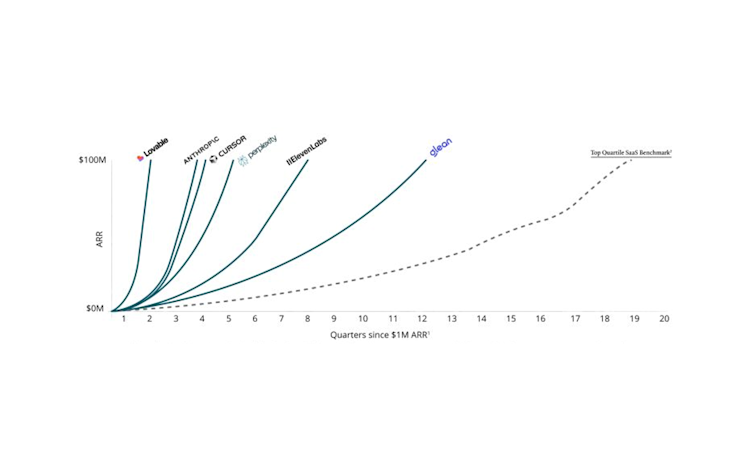

9. AI natives are scaling at record speed

If we look beyond the Big Tech success stories, AI-native software companies are also growing much faster than previous generations of SaaS.

Iconiq’s State of Software 2025 shows AI-first startups reaching USD 100 million in annual recurring revenue within one to two years. Historically, even top-quartile software names took two to three times longer to hit that milestone.
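To see what ‘one to two years’ means in growth terms, the sketch below computes the constant quarterly growth rate needed to go from USD 1 million to USD 100 million ARR in a given number of quarters. The 4- and 8-quarter cases correspond to the one-to-two-year range quoted above; the 12- and 24-quarter cases are our own illustration of a path two to three times longer. It assumes smooth compounding, which real ARR curves will not follow exactly.

```python
def implied_quarterly_growth(start_arr: float, end_arr: float, quarters: int) -> float:
    """Constant quarter-on-quarter growth rate that takes start_arr to end_arr."""
    return (end_arr / start_arr) ** (1 / quarters) - 1


START_ARR = 1e6   # USD 1 million ARR
END_ARR = 100e6   # USD 100 million ARR

for quarters in (4, 8, 12, 24):
    rate = implied_quarterly_growth(START_ARR, END_ARR, quarters)
    print(f"{quarters:>2} quarters: {rate:.1%} growth per quarter")
```

Under this smooth-compounding assumption, hitting the milestone in four quarters requires more than 200% growth per quarter, versus roughly 21% per quarter over 24 quarters.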

Quarters from USD 1 million to USD 100 million ARR

Source: Iconiq's 'State of Software 2025', 2025

That speed tells us customers are willing to pay for AI. It also suggests a strong future pipeline of listed ‘AI winners’ beyond the current mega-caps.

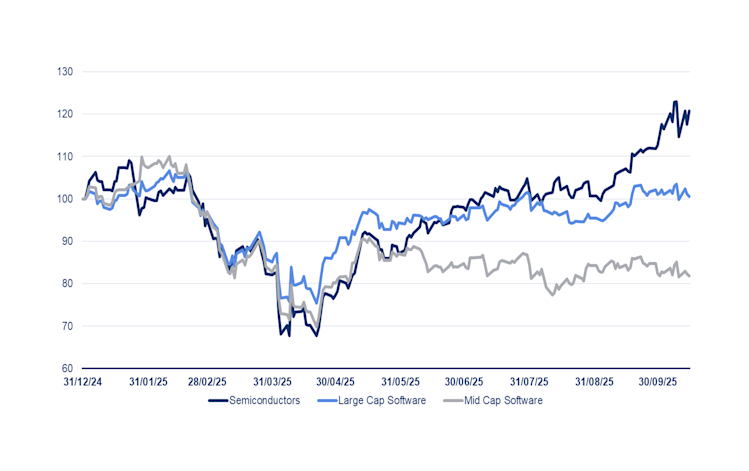

10. SaaS stands to gain (but is priced for disruption)

Since Sam Altman’s tweet about the ‘fast fashion’ era of SaaS, software stocks have materially underperformed semiconductors. The market seems convinced that AI will hollow out much of the application layer.

Performance of different buckets

Source: DPAM, 2025

There is some truth here for undifferentiated tools. Simple apps will be easier to replicate in an AI world, and pricing pressure is likely. But that is not the whole SaaS universe.

Core systems of record, such as ERP, CRM, HCM and finance platforms, are deeply embedded in operations. They are critical for compliance, security and interoperability. Replacing them is costly, risky, and rarely offers a real competitive edge. For most companies, it makes far more sense to build AI agents and automation on top of these systems than to rip them out.

We expect the winners in SaaS to be those that embrace AI as a co-pilot layer, automating tasks historically done by developers, analysts and operators, while continuing to anchor the underlying data and workflows. Yet many of these names are currently priced as if they will be fully disrupted rather than enhanced.

What could derail this?

That is not to say that the AI cycle will be a straight line. Pricing power could erode if base models commoditise faster than expected. That would be a headwind for model vendors but a tailwind for infrastructure providers, which underpins our positive stance on the latter. Regulation and data rules may slow adoption in sensitive sectors. Geopolitical risks around chips, Taiwan and critical materials could tighten supply.

And the capital intensity of the build-out means misallocated capex will be punished. These are the main swing factors in our view, but they change the tempo of the cycle more than the direction: the productivity gains are already visible and once embedded in workflows they are hard to roll back.

The early innings of a supercycle

Put all of this together and the picture is clear:

1. Monetisation is deliberately lagging adoption, as it did in past platform shifts.

2. Earnings growth is backing up share price performance.

3. Valuations are elevated but far from euphoric relative to the fundamentals.

4. Model quality is still improving rapidly.

5. Compute needs keep increasing.

6. Token demand is compounding at extraordinary rates.

7. Power, chips, land and cooling are hard constraints rather than areas of overbuild.

8. Data centre capex is likely underestimated.

9. AI-native companies are scaling revenues faster than any previous software cohort.

10. Core SaaS, a key AI beneficiary, is priced for a very bearish disruption narrative.

In our view, this clearly forms the profile of a long and powerful AI investment cycle, marked by occasional bouts of dispersion but, above all, plenty of opportunities.

Disclaimer

Degroof Petercam Asset Management SA/NV (DPAM) | rue Guimard 18, 1040 Brussels, Belgium | RPM/RPR Brussels | TVA BE 0886 223 276

Marketing Communication. Investing incurs risks.

The views and opinions contained herein are those of the individuals to whom they are attributed and may not necessarily represent views expressed or reflected in other DPAM communications, strategies or funds.

The provided information herein must be considered as having a general nature and does not, under any circumstances, intend to be tailored to your personal situation. Its content does not represent investment advice, nor does it constitute an offer, solicitation, recommendation or invitation to buy, sell, subscribe to or execute any other transaction with financial instruments. Neither does this document constitute independent or objective investment research or financial analysis or other form of general recommendation on transaction in financial instruments as referred to under Article 2, 2°, 5 of the law of 25 October 2016 relating to the access to the provision of investment services and the status and supervision of portfolio management companies and investment advisors. The information herein should thus not be considered as independent or objective investment research.

Past performances do not guarantee future results. All opinions and financial estimates are a reflection of the situation at issuance and are subject to amendments without notice. Changed market circumstances may render the opinions and statements incorrect.